File compression is possible on Apache web hosts that do not have mod_gzip or mod_deflate enabled, and it’s easier than you might think.

A great explanation of why compression helps the web deliver a better user experience is at betterexplained.com.

Two authoritative articles on the subject are the “Enable compression” section of Google’s Performance Best Practices documentation and the “Gzip Components” section of Yahoo’s Best Practices for Speeding Up Your Web Site.

Compressing PHP files

If your Apache server does not have mod_gzip or mod_deflate enabled (GoDaddy and JustHost shared hosting, for example), you can use PHP to compress pages on the fly. This is still preferable to sending uncompressed files to the browser, so don’t worry about the additional work the server has to do to compress the files at each request.

Option 1: PHP.INI using zlib.output_compression

The zlib extension can transparently compress PHP pages on the fly when the browser sends an “Accept-Encoding” header that lists gzip or deflate. Compression with zlib.output_compression seems to be disabled by default on most hosts, but it can be enabled with a custom php.ini file:

[PHP]

zlib.output_compression = On

Credit: http://php.net/manual/en/zlib.configuration.php

Check with your host for instructions on how to implement this, and whether you need a php.ini file in each directory.

Option 2: PHP using ob_gzhandler

If your host does not allow custom php.ini files, you can add the following line of code to the top of your PHP pages, above the DOCTYPE declaration or first line of output:

<?php if (isset($_SERVER['HTTP_ACCEPT_ENCODING']) && substr_count($_SERVER['HTTP_ACCEPT_ENCODING'], 'gzip')) ob_start("ob_gzhandler"); else ob_start(); ?>

Credit: GoDaddy.com

For WordPress sites, this code would be added to the top of the theme’s header.php file.

According to php.net, using zlib.output_compression is preferred over ob_gzhandler().

For WordPress or other CMS sites, an advantage of zlib.output_compression over the ob_gzhandler method is that all of the .php pages served will be compressed, not just those that contain the global include (e.g., header.php).

Running both ob_gzhandler and zlib.output_compression at the same time will throw a warning, similar to:

Warning: ob_start() [ref.outcontrol]: output handler 'ob_gzhandler' conflicts with 'zlib output compression' in /home/path/public_html/ardamis.com/wp-content/themes/mytheme/header.php on line 7

Compressing CSS and JavaScript files

Because the on-the-fly methods above only work for PHP pages, you’ll need something else to compress CSS and JavaScript files. Furthermore, these files typically don’t change, so there isn’t a need to compress them at each request. A better method is to serve pre-compressed versions of these files. I’ll describe two ways to create them; in both cases, you’ll need to add some lines to your .htaccess file to send user agents the gzipped versions if they support the encoding. This requires that Apache’s mod_rewrite be enabled (and I think it’s almost universally enabled).

Option 1: Compress locally and upload

CSS and JavaScript files can be gzipped on the workstation, then uploaded along with the uncompressed files. Use a utility like 7-Zip (quite possibly the best compression software around, and it’s free) to compress the CSS and JavaScript files using the gzip format (with extension *.gz), then upload them to your server.

For Windows users, here is a handy command to compress all the .css and .js files in the current directory and all subdirectories (adjust the path to the 7-Zip executable, 7z.exe, as necessary):

for /R %i in (*.css *.js) do "C:\Program Files (x86)\7-Zip\7z.exe" a -tgzip "%i.gz" "%i" -mx9

Note that the above command is to be run from the command line. The batch file equivalent would be:

for /R %%i in (*.css *.js) do "C:\Program Files (x86)\7-Zip\7z.exe" a -tgzip "%%i.gz" "%%i" -mx9

Option 2: Compress on the server

If you have shell access, you can run a command to create a gzip copy of each CSS and JavaScript file on your site (or, if you are developing on Linux, you can run it locally):

find . -type f \( -name '*.css' -o -name '*.js' \) -exec bash -c 'echo "Compressing $1" && gzip -c --best "$1" > "$1.gz"' _ {} \;

This may be a bit too technical for many people, but it is also much more convenient. It is particularly useful when you need to compress a large number of files (as in the case of a WordPress installation with multiple plugins). Remember to run it again after every update to WordPress, your theme, or any plugins, so that the gzipped copies of any changed CSS and JavaScript files are replaced.
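Because the command above recompresses every file on each run, a variation that only touches missing or outdated copies can save time on a large site. The following is a minimal sketch rather than a tested production script: compress_stale is a hypothetical helper name, and it assumes a shell whose test command supports -nt (bash and dash both do).

```shell
# Sketch: refresh the .gz copy of each CSS/JS file only when it is missing
# or older than its source. compress_stale is a hypothetical helper name.
compress_stale() {
  # $1 is the directory to scan; defaults to the current directory.
  find "${1:-.}" -type f \( -name '*.css' -o -name '*.js' \) -exec sh -c '
    for f in "$@"; do
      # Recompress when no .gz copy exists or the source is newer than it.
      if [ ! -e "$f.gz" ] || [ "$f" -nt "$f.gz" ]; then
        echo "Compressing $f"
        gzip -c -9 "$f" > "$f.gz"   # -9 is the short form of --best
      fi
    done
  ' _ {} +
}
```

Calling compress_stale with no argument scans the current directory; passing a path such as the site root scans that directory instead.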

The .htaccess (for both options)

Add the following lines to your .htaccess file to serve the gzip-encoded versions of these files to user agents that can accept them.

<Files *.js.gz>
AddType "text/javascript" .gz
AddEncoding gzip .gz
</Files>

<Files *.css.gz>
AddType "text/css" .gz
AddEncoding gzip .gz
</Files>

RewriteEngine on
# Check to see if the browser can accept gzip files.
RewriteCond %{HTTP:accept-encoding} gzip
RewriteCond %{HTTP_USER_AGENT} !Safari
# Make sure there's no trailing .gz on the URL.
RewriteCond %{REQUEST_FILENAME} !^.+\.gz$
# Check to see if a .gz version of the file exists.
RewriteCond %{REQUEST_FILENAME}.gz -f
# All conditions met, so add .gz to the URL filename (invisibly).
RewriteRule ^(.+) $1.gz [QSA,L]

Credit: opensourcetutor.com

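I’m not sure it’s still necessary to exclude Safari. Keep in mind that the condition matches any user agent string containing “Safari”, which includes Chrome’s, so those browsers will receive the uncompressed files.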
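To make the logic of the four rewrite conditions easier to follow, here is a rough shell sketch of the same decision. It is only an illustration of the rules, not how Apache evaluates them internally, and would_serve_gz is a hypothetical function name. It takes the Accept-Encoding value, the user agent string, and the path of the requested file on disk.

```shell
# Sketch of the decision made by the RewriteCond lines.
# Usage: would_serve_gz ACCEPT_ENCODING USER_AGENT FILE
# Returns 0 when every condition passes, i.e. FILE.gz would be served.
would_serve_gz() {
  accept_encoding=$1
  user_agent=$2
  file=$3
  # RewriteCond %{HTTP:accept-encoding} gzip
  case $accept_encoding in *gzip*) ;; *) return 1 ;; esac
  # RewriteCond %{HTTP_USER_AGENT} !Safari
  case $user_agent in *Safari*) return 1 ;; esac
  # RewriteCond %{REQUEST_FILENAME} !^.+\.gz$
  case $file in ?*.gz) return 1 ;; esac
  # RewriteCond %{REQUEST_FILENAME}.gz -f
  [ -f "$file.gz" ]
}
```

For example, a request that advertises gzip from a Firefox user agent for a stylesheet that has a .gz copy next to it passes all four checks, while the same request from any user agent containing “Safari” does not.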

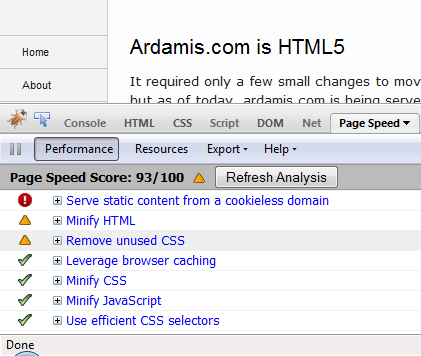

For added benefit, minify the CSS and JavaScript files before gzipping them. Google’s excellent Page Speed Firefox/Firebug Add-on makes this very easy. Yahoo’s YUI Compressor is also quite good.

Verify that your content is being compressed

Use the nifty Web Page Content Compression Verification tool at http://www.whatsmyip.org/http_compression/ to confirm that your server is sending the compressed files.
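If you would rather check from a terminal, the response headers tell the same story. This is a minimal sketch, assuming curl is installed; is_gzip_encoded is a hypothetical helper name and the URL in the comment is a placeholder, not a real file.

```shell
# Sketch: read HTTP response headers on stdin and succeed (exit status 0)
# when a "Content-Encoding" header mentions gzip.
is_gzip_encoded() {
  # Strip carriage returns, then match the header name case-insensitively.
  tr -d '\r' | grep -qi '^content-encoding:.*gzip'
}

# Example usage (placeholder URL; requires network access):
#   curl -s -o /dev/null -D - -H 'Accept-Encoding: gzip' \
#     'http://example.com/style.css' | is_gzip_encoded && echo "compressed"
```

The curl options discard the body (-o /dev/null), print the response headers to standard output (-D -), and send the request header that asks for gzip (-H).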

Speed up page load times for returning visitors

Compression is only part of the story. To further speed up page load times for your returning visitors, you will want to send the correct headers to leverage browser caching.