I am looking for cheap, shared web hosting because the downtime and customer support I’m getting with JustHost are intolerable (and well documented). I’m willing to pay about $10/month for hosting, and I don’t ask for much other than reasonable uptime. I have a blog (ardamis.com) that gets about 1000 visits per day, a few other sites that barely get any traffic, and a small photo gallery that I share only with family and friends. I don’t stream or make available for download any video or audio files, but I do want to be able to upload all of my personal photos and use my web host as an off-site backup. I have an account with Dropbox, but I have gigs of photos, so I would have to purchase extra storage, and I kinda want to keep the Dropbox stuff separate. I’ve also considered using Google Drive, but I have enough photos that I would need to purchase additional space.

I’m currently paying $108/year for hosting and an additional $19.99/year for a dedicated IP address. I’m willing to pay a little more, but not terribly much more.
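To put those two charges on the same footing as the roughly $10/month I said I was willing to pay, here is a quick back-of-the-envelope sketch in Python. The variable names are my own, and the only inputs are the two figures quoted above.

```python
# Figures from the paragraph above; the names are just for illustration.
hosting_per_year = 108.00
dedicated_ip_per_year = 19.99

# Combine the two annual charges, then spread them over 12 months.
total_per_year = hosting_per_year + dedicated_ip_per_year
total_per_month = total_per_year / 12

print(f"Total per year:  ${total_per_year:.2f}")   # $127.99
print(f"Total per month: ${total_per_month:.2f}")  # $10.67
```

So with the dedicated IP included, I’m already paying slightly more than $10/month.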

Before JustHost, I was a GoDaddy customer for something like 5 years, and there was almost no downtime. Customer service was incredibly, surprisingly good and the reps were always knowledgeable and effective. I left because I didn’t like the proprietary admin panel that GoDaddy has developed and wanted to keep my domain registrar and my web host at separate companies. But really, there was nothing wrong with GoDaddy’s hosting at the time, that I could tell.

The problem with researching new web hosts is that unbiased information (if it’s out there) is buried under tons of completely untrustworthy garbage sites that sell reviews and rankings. If you look for personal recommendations based on experiences with multiple hosts, it is extremely hard to tell from the obscure bulletin board threads whether the posts come from shills or actual customers. Even if one finds posts that appear to be from genuine customers, their descriptions of their experiences are usually subjective, anecdotal, and not comparative.

(As a little aside, BlueHost, HostGator, HostMonster, and JustHost are all owned by the same corporate parent, Endurance International Group. An outage affecting one can affect them all, as described in the Mashable article: Bluehost, HostGator and HostMonster Go Down. So, take these things into consideration if you are trying to choose between them.)

This post, then, is just my notes on what I’ve found while researching my next web host.

No Host

One option would be to not go with a hosting company at all and just use Amazon EC2 to self-host my blog. I have seriously considered doing this, but by some accounts, it’s actually more expensive to run an EC2 instance than to purchase shared hosting. And I don’t really want to become my own Linux administrator. As much as I enjoy occasionally tinkering with Ubuntu, I just want my sites to run smoothly and for someone to keep the server up-to-date and to fix problems for me quickly if something breaks.

Bluehost

http://www.bluehost.com/

Everyone has heard of Bluehost. They’re huge. Their tagline is “Trusted by Millions as the Best Web Hosting Solution”. So there you have it. Maybe you want to be one of their millions of customers.

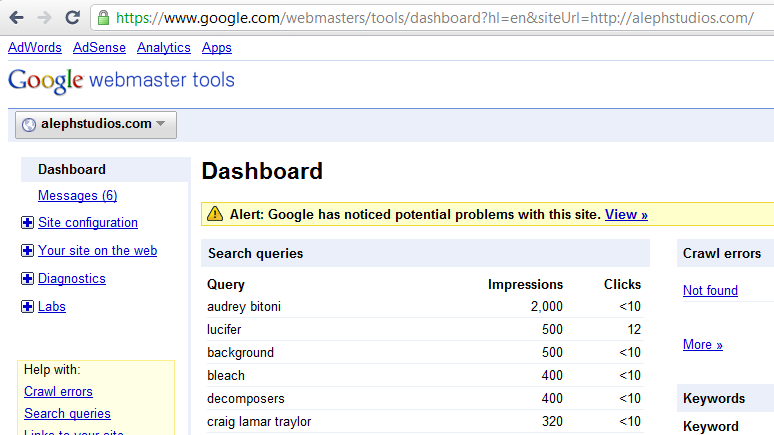

According to the builtwith.com profile for ardamis.com, the site’s hosting provider is not JustHost but BlueHost!

Their website has terribly low production values, for being such a huge company. They have a crappy stock photograph of a dude with a headset providing customer support, below the text “We specialize in customer service. Call or Chat!” I do not want to live chat with this dude, or anyone else. I probably don’t need any customer service from my hosting company unless they screw something up, so prominently featuring their support phone numbers makes me wonder if they get tons of calls.

They do have cPanel, which is nice, as I am wary of proprietary admin panels after using GoDaddy for years.

They also offer unlimited domains, unlimited storage, unlimited bandwidth, unlimited email accounts, and a free domain. They also offer custom php.ini files and SSH access. And they do all of this for just $6.95/month for 1 year.

It actually sounds pretty decent, on paper. But one does get the sense that they are completely driven by the bottom line, and that your site is going to be jammed into an already crowded server.

HostGator

http://www.hostgator.com/

HostGator is the other huge discount web hosting company, competing with BlueHost and GoDaddy for what I picture to be the same sort of confused customers or WordPress blog/Google AdSense scammers.

Like with BlueHost, I’m immediately turned off by the website, which is just ugly as all get out and also conspicuously promotes Live Chat support with a stock photograph of a girl with a (wired) headset. The pricing for the hosting plans is also a bit misleading, as all of the prices shown are discounted 20%, and that discount is only valid for the first invoice.

Another thing I really dislike is that there are three tiers of shared hosting, named “Hatchling Plan”, “Baby Plan”, and “Business Plan”. I just can’t see myself signing up for the ridiculously named “Hatchling Plan” or “Baby Plan”, which is probably by design so that people like me upgrade to the more respectable and grown-up sounding “Business Plan”.

They do offer a single free website transfer for shared hosting, whether or not the site being transferred uses cPanel.

Lithium Hosting

http://www.lithiumhosting.com/

I first heard about Lithium Hosting while reading the Ars Technica article How to set up a safe and secure Web server, which also mentioned A Small Orange.

Their site looks pretty good, but it’s a little bit too template-like, and I felt that a quick glance on themeforest.net would turn up about a dozen hosting reseller templates for which the layout and stock placeholder text is an almost exact match. For example, the tagline on their home page is “Why we’re not the best host in the world.” Yeah, that sort of false modesty smells just a bit too contrived here. I was almost ready to sign up with Lithium Hosting, but the cookie-cutter stuff gave me enough pause to keep looking.

One of the things that I didn’t like is that they offer a free month coupon code, but it doesn’t work when you configure your cart to pay a year-at-a-time. Another thing I didn’t like was the cost of a dedicated IP address: $5 to set up and then $3 per month ($36/year) thereafter. It seems like the price on dedicated IP addresses has dramatically increased in the months since all the breathless news reports about the world running out of IPv4 addresses. They also charge an extra $60/year for shell access via SSH. That’s pretty shameful.
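For what it’s worth, here is a rough sketch of how those add-ons stack up over a year. I’m assuming the $5 setup fee is a one-time charge on top of the $3 monthly fee, and the function and variable names are made up for illustration.

```python
def first_year_cost(setup_fee, monthly_fee, months=12):
    """One-time setup fee plus the monthly fee for the given number of months."""
    return setup_fee + monthly_fee * months

# Lithium Hosting add-on prices quoted above.
ip_first_year = first_year_cost(setup_fee=5, monthly_fee=3)  # 5 + 3 * 12
ip_later_years = 3 * 12                                      # no setup fee after year one
ssh_per_year = 60

addons_first_year = ip_first_year + ssh_per_year

print(f"Dedicated IP, first year:  ${ip_first_year}")      # $41
print(f"Dedicated IP, later years: ${ip_later_years}")     # $36
print(f"IP + SSH, first year:      ${addons_first_year}")  # $101
```

Under that assumption, the two add-ons alone come to $101 in the first year, before paying for any hosting at all.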

Bailing out of the cart just before purchase doesn’t cause a window to pop up and lure you back with a discount, as I fully expected to happen.

I checked out their Facebook page and the most recent post was from a guy whose website was down. Two other recent posts were about downtime.

At the end of the day, Lithium Hosting just seems more like a hosting reseller itself than a company that will be around for years.

A Small Orange

I first heard about A Small Orange while reading the same Ars Technica article How to set up a safe and secure Web server that mentioned Lithium Hosting.

My first impression of the site was that it looked pretty much exactly as I wanted my hosting company to look. Again, I’m being pretty superficial here, but I want to be happy with my choice and if the company’s outward appearance is cruddy then I’m not going to be as satisfied. I want to be certain that I made the best choice, and a crappy, thrown-together-looking site injects a small amount of doubt. The site clearly sets out the costs of the different hosting plans, and I knew I would be looking at shared hosting first. There are five tiers of shared hosting, the least expensive being $35/year for 250 MB storage and 5 GB bandwidth. Basically, the only differentiating factor between the different levels of shared hosting is the amount of storage and bandwidth. The standard features for all shared hosting accounts include:

- Unlimited Parked and Addon Domains

- Unlimited Subdomains

- Unlimited POP3/IMAP Mail Accounts

- Unlimited MySQL Databases

- Unlimited FTP Accounts

- Automated Daily Backups

- cPanel

- Automatic Script Installation (Softaculous)

- Jailed shell upon request

- FTP and SFTP access

- Cron jobs for scheduled tasks

- 99.9% uptime guarantee
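Since uptime is the one thing I really care about, it’s worth working out what a 99.9% guarantee actually permits. This is only a sketch: I’m assuming the percentage is measured against total clock time with no exclusions for scheduled maintenance, which may not match how any particular host defines it. The function name is my own.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours in a non-leap year

def allowed_downtime_hours(uptime_percent, period_hours=HOURS_PER_YEAR):
    """Hours of downtime a given uptime percentage permits over a period."""
    return period_hours * (1 - uptime_percent / 100)

per_year = allowed_downtime_hours(99.9)            # about 8.76 hours
per_month = allowed_downtime_hours(99.9, 30 * 24)  # about 0.72 hours in a 30-day month

print(f"Per year:  {per_year:.2f} hours")
print(f"Per month: {per_month * 60:.1f} minutes")
```

In other words, a host could be down for the better part of a working day each year and still meet the guarantee.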

A Small Orange uses CloudLinux as the server OS.

OK, yes, there is still a Live Chat button, but it’s this inconspicuous little green tab at the left side of the window that states “live help”. That’s it.

I checked out their Facebook page for recent posts, and they were almost entirely positive, with a good amount of interaction from the admin. The page claims that the company is home to 45,000 web sites, which might be exactly what I’m looking for, without even knowing it.

One of the things that ultimately convinced me to go with them was the Inc.com write-up of CEO Douglas Hanna in America’s Coolest College Start-Ups 2012 and the Duke Chronicle article Hanna makes juicy profits with A Small Orange. Hanna worked at HostGator for two years in customer service, so one could reasonably expect that he knows what customers want in affordable hosting.

I have also found a ton of coupon codes for A Small Orange (just Google it) for either $5 or 15% off your order.

saveme$5

saveme15%

save_$5

save_15%